I've had a vague plan to augment my coffee table with some media centre controls for a while now, so when my brother's partner bought two MakeyMakeys for my son I, uh... "borrowed" one for myself. Today I finally kicked off this project by re-mapping the inputs to the keys I wanted them to trigger, but hit a difficulty with the non-standard media keys for volume control.

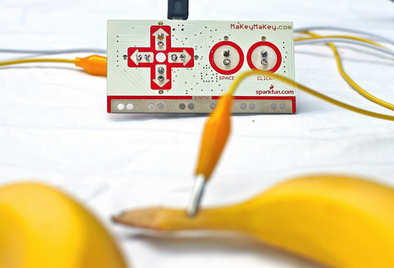

Essentially, the MakeyMakey presents itself as a USB HID device - like a keyboard/mouse combo - and you then hook up anything conductive to an input, and use that to complete a circuit through to ground, which then triggers a key press or mouse movement. My plan is to have some coins or brass discs embossed into the surface of the table, and use these as attractive (and toddler-proof, since there will be no moving parts) keys.

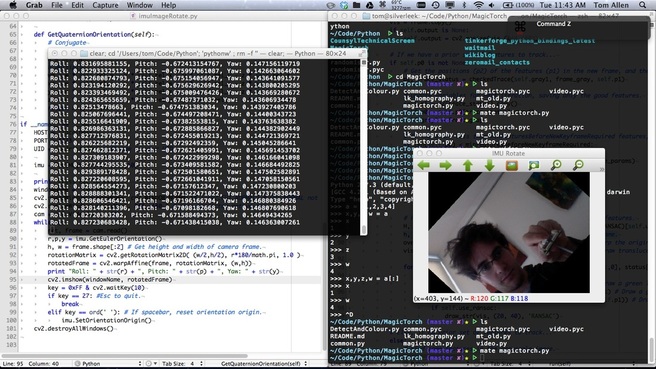

Unfortunately, under the hood the MakeyMakey is essentially an Arduino board, and the HID implementation is designed to help Arduino's target market - not embedded software engineers. One irritating feature is that they want users to be able to easily say something like sendKey( 'a' ) rather than sendKey( 0x61 ), and in their infinite wisdom they've managed to implement this in such a way that you actually cannot send anything other than an ASCII character or modifier key. Great, we can signal to our typewriters to reset their carriage feed, but not send a volume up command because typewriters only have two volume settings anyway: 'loud' and 'obsolete'. I swear that joke was funnier before I wrote it down.
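To illustrate why an ASCII-keyed interface boxes you in, here is a rough sketch of the kind of lookup such an API has to do. This is not the Arduino library's actual code; `asciiToKeyboardUsage` is a made-up name, and only a handful of characters are covered. The usage IDs themselves are the standard ones from the HID Keyboard usage page.

```cpp
#include <cstdint>

// Hypothetical helper (not from the Arduino core): map a small subset of
// ASCII characters to usage IDs on the HID Keyboard usage page.
// Returns 0 when the character has no mapping in this sketch.
std::uint8_t asciiToKeyboardUsage(char c) {
    if (c >= 'a' && c <= 'z') {
        return static_cast<std::uint8_t>(0x04 + (c - 'a'));  // 'a' is usage 0x04
    }
    if (c >= '1' && c <= '9') {
        return static_cast<std::uint8_t>(0x1E + (c - '1'));  // '1' is usage 0x1E
    }
    if (c == '0')  return 0x27;
    if (c == '\n') return 0x28;  // Enter
    if (c == ' ')  return 0x2C;  // Space
    return 0;                    // nothing else is reachable from ASCII here
}
```

The point is that the input domain is characters. Volume up has no character, so there is nothing you could pass in that would come out the other side as a media key.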

At any rate, for my first ever Arduino program, I've gone in and edited the core library to work around this. This commit shows the necessary changes to the MakeyMakey code, but the additions may well be helpful for any other Arduino-based projects that require HID device work.
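For anyone attempting something similar, the general shape of the fix is usually to add a Consumer Control collection to the HID report descriptor and send 16-bit usage IDs through it. The sketch below is plain C++ rather than a copy of the commit: the report ID of 3 and all the names are assumptions for illustration, and the real Arduino core has its own descriptor layout and send routine. The usage values (0xE2 mute, 0xE9 volume up, 0xEA volume down) come from the HID Consumer usage page.

```cpp
#include <array>
#include <cassert>
#include <cstdint>

// Usage IDs from the USB HID Consumer usage page (0x0C).
constexpr std::uint16_t kUsageMute       = 0x00E2;
constexpr std::uint16_t kUsageVolumeUp   = 0x00E9;
constexpr std::uint16_t kUsageVolumeDown = 0x00EA;

// Assumed report ID; pick one the existing descriptor does not already use.
constexpr std::uint8_t kConsumerReportId = 3;

// Descriptor fragment declaring one 16-bit consumer usage per report.
constexpr std::uint8_t kConsumerDescriptor[] = {
    0x05, 0x0C,               // Usage Page (Consumer)
    0x09, 0x01,               // Usage (Consumer Control)
    0xA1, 0x01,               // Collection (Application)
    0x85, kConsumerReportId,  //   Report ID
    0x15, 0x00,               //   Logical Minimum (0)
    0x26, 0xFF, 0x03,         //   Logical Maximum (0x03FF)
    0x19, 0x00,               //   Usage Minimum (0)
    0x2A, 0xFF, 0x03,         //   Usage Maximum (0x03FF)
    0x75, 0x10,               //   Report Size (16 bits)
    0x95, 0x01,               //   Report Count (1)
    0x81, 0x00,               //   Input (Data, Array, Absolute)
    0xC0                      // End Collection
};

// Wire format: report ID, then the usage ID as little-endian 16 bits.
using ConsumerReport = std::array<std::uint8_t, 3>;

// Build the "key down" report for a consumer usage.
ConsumerReport makeConsumerReport(std::uint16_t usage) {
    assert(usage <= 0x03FF);  // must fit the range declared in the descriptor
    return { kConsumerReportId,
             static_cast<std::uint8_t>(usage & 0xFF),
             static_cast<std::uint8_t>(usage >> 8) };
}

// Build the "key up" report: usage 0 means nothing is pressed.
ConsumerReport makeConsumerRelease() {
    return makeConsumerReport(0);
}
```

On the board you would hand the bytes of `makeConsumerReport(kUsageVolumeUp)` to whatever routine writes to the HID endpoint, then send `makeConsumerRelease()` afterwards so the host does not think the key is stuck down.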
