Yesterday I finally received my inertial measurement unit (IMU). This is a tiny unit containing a gyroscope, accelerometer, and magnetometer. The gyroscope measures angular velocity - how fast the unit is rotating around each of its three axes. The accelerometer measures acceleration along each axis - how fast the unit's velocity is changing along that axis. The magnetometer measures the prevailing magnetic field at the unit - assuming the field around the unit doesn't change, this provides a coarse measure of the unit's orientation.

All of this information is mashed together in an orientation filter (more specifically, Seb Madgwick's implementation of Rob Mahony's Direction Cosine Matrix (DCM) filter, which is a complementary filter rather than a Kalman filter proper). This filter integrates the gyroscope readings to determine orientation, and minimises drift via feedback from the accelerometer, which senses the direction of gravity, and the magnetometer, which senses heading. This page at TinkerForge describes the IMU in detail, and the underlying equations can be found in Madgwick, S. O., An efficient orientation filter for inertial and inertial/magnetic sensor arrays, University of Bristol, April 2010. Overall, and especially considering it costs less than $100, this unit is awesome!
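
To give a feel for the "integrate the gyro, then correct with feedback" idea, here's a rough one-axis sketch. To be clear, this is not the filter running on the IMU: it's a much simpler complementary filter, and the function names, the gain of 0.02, and the simulated 1 degree/second gyro bias are all made up for illustration.

```python
import math


def accel_roll_deg(ay, az):
    """Roll angle implied by the gravity vector the accelerometer sees."""
    return math.degrees(math.atan2(ay, az))


def complementary_step(angle_deg, gyro_dps, accel_angle_deg, dt, gain=0.02):
    """One filter update: integrate the gyro, then nudge toward the accel angle."""
    predicted = angle_deg + gyro_dps * dt  # gyro integration (drifts over time)
    return predicted + gain * (accel_angle_deg - predicted)  # feedback correction


def simulate(seconds=10.0, dt=0.01, gyro_bias_dps=1.0):
    """Stationary unit with a biased gyro. Returns (gyro_only, filtered) in degrees."""
    gyro_only = 0.0
    filtered = 0.0
    accel_angle = accel_roll_deg(0.0, 1.0)  # gravity straight down -> 0 degrees
    for _ in range(int(round(seconds / dt))):
        gyro_only += gyro_bias_dps * dt
        filtered = complementary_step(filtered, gyro_bias_dps, accel_angle, dt)
    return gyro_only, filtered


if __name__ == "__main__":
    drift, fused = simulate()
    print(f"gyro only: {drift:.2f} deg, with feedback: {fused:.2f} deg")
```

With these made-up numbers the gyro-only estimate wanders off by about 10 degrees in 10 seconds, while the corrected estimate settles at roughly half a degree. The real filter does the same sort of thing in three dimensions, with the magnetometer pinning down yaw.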

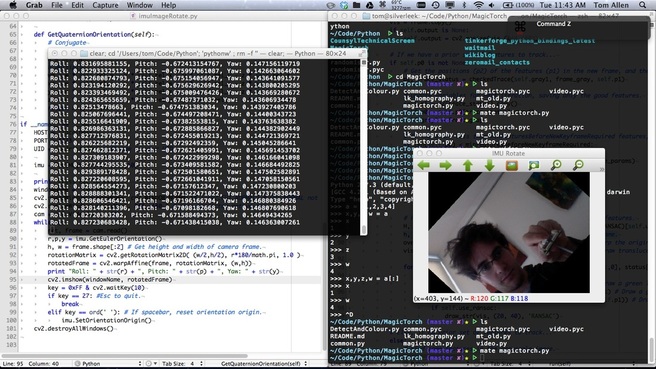

This particular IMU is actually built around a 32-bit ARM processor, which does all the filter calculations onboard and processes USB commands to access the API. You simply run a daemon on your PC which translates TCP/IP commands to USB, and this allows the manufacturer to offer very simple APIs in a variety of languages, since they all just talk TCP/IP. Personally, I'm using Python because this project also makes heavy use of OpenCV, which has Python bindings.

Ok, so what am I doing with it?

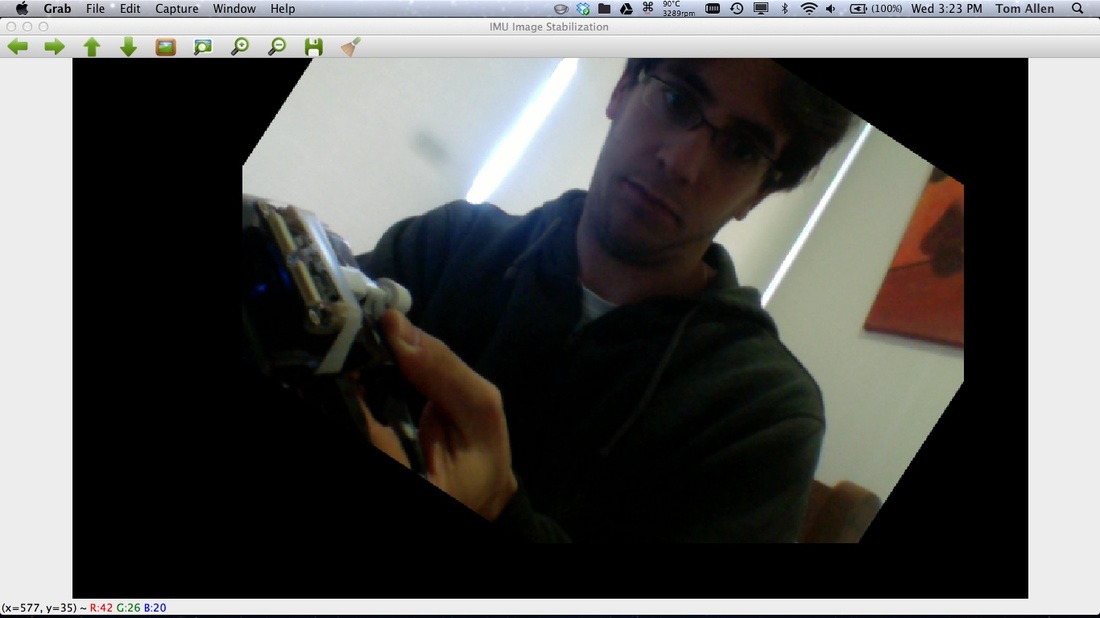

Well, first up, I stuck it to a laser projector. With tape.

Then I wrote some Python code to rotate and translate the image given the IMU's readings for orientation. In the image above, I'm taking my laptop's camera as the input image. I record a base orientation and then measure differences compared to this. The image gets rotated by the opposite of the change in roll. It gets moved left or right by the change in yaw, divided by the horizontal field of view angle, multiplied by the projector's horizontal number of pixels. Likewise, it's moved up or down by the change in pitch, divided by the vertical field of view angle, multiplied by the projector's vertical number of pixels.
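
Here's a minimal sketch of that arithmetic, not the actual code from this project. The function names, the degree units, the sign conventions (which way counts as positive yaw or pitch), and the example field of view and resolution are all assumptions you'd need to adjust for your own IMU and projector.

```python
def angle_diff(current_deg, base_deg):
    """Signed difference current - base, wrapped into [-180, 180) degrees."""
    return (current_deg - base_deg + 180.0) % 360.0 - 180.0


def projector_correction(base, current, fov_deg, resolution):
    """Return (rotation_deg, shift_x_px, shift_y_px) for the projected image.

    base, current: (roll, pitch, yaw) orientations in degrees
    fov_deg:       (horizontal, vertical) projector field of view in degrees
    resolution:    (width, height) of the projector in pixels
    """
    d_roll = angle_diff(current[0], base[0])
    d_pitch = angle_diff(current[1], base[1])
    d_yaw = angle_diff(current[2], base[2])

    rotation = -d_roll  # rotate by the opposite of the change in roll
    shift_x = d_yaw / fov_deg[0] * resolution[0]  # yaw as a fraction of the width
    shift_y = d_pitch / fov_deg[1] * resolution[1]  # pitch as a fraction of the height
    return rotation, shift_x, shift_y


if __name__ == "__main__":
    base = (0.0, 0.0, 0.0)
    current = (5.0, 7.5, 10.0)  # hypothetical IMU reading: roll, pitch, yaw
    print(projector_correction(base, current, fov_deg=(40.0, 30.0), resolution=(800, 600)))
```

The wrap-around in `angle_diff` matters because yaw can jump from 179 to -179 degrees when the projector has really only turned by 2 degrees. The three numbers that come out could then be used to build an affine transform for the image, for example with OpenCV.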

For those readers who've not done IMU to sensor frame transformations before, this is one of the dodgiest hacks known to mankind. Despite this, it kinda sorta works.

This picture was awkward to take: My laptop is filming me. The projector is drawing the output on my wall. And I'm struggling to take the photo with my phone.

You know what - I'll just make a video... Stay tuned for part two! :-)
